Set up Sensor Fusion

Upload and set up RV (Reference Viewer) images.

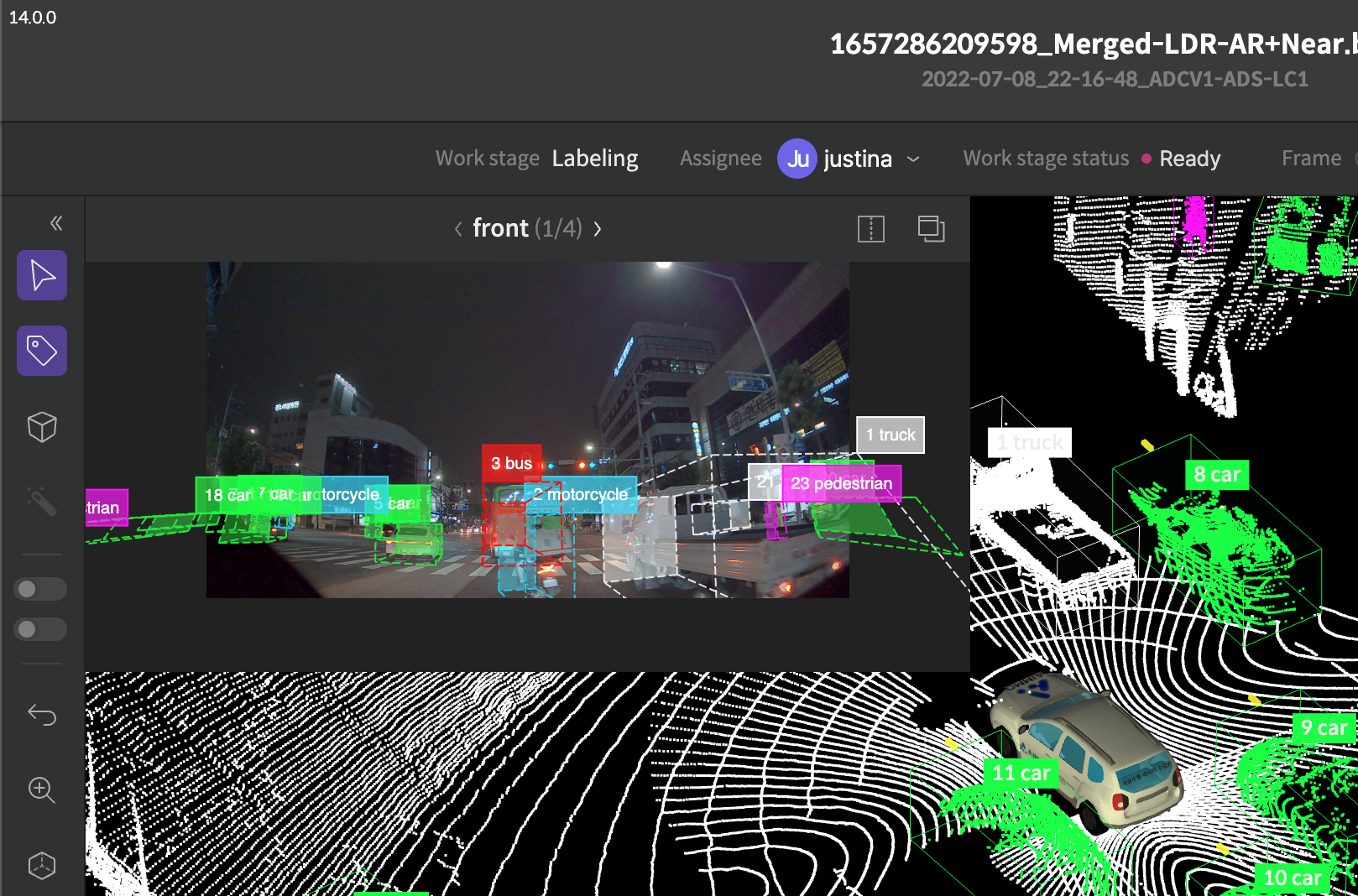

What is Sensor Fusion?

Sensor Fusion is a feature of the LiDAR 3D Cuboid task type that lets you view camera images captured at the same time as the LiDAR data while you label. The camera image viewer shown during labeling is called the RV (Reference Viewer).

Because the LiDAR sensor and the camera that captures the RV images do not collect data from the same location, their positions and angles of view must be aligned. The setting value that corrects this offset is called the Calibration value.
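To make the role of the Calibration value concrete, the sketch below projects one LiDAR point into an RV image using a plain pinhole model. It assumes the extrinsic calibration is available as a 3×3 rotation matrix `R` and a translation `t` that map LiDAR coordinates into camera coordinates (camera z axis pointing forward), and that the intrinsics are `fx, fy, cx, cy`; lens distortion is ignored. The function `project_point` and these conventions are illustrative assumptions, not the platform's documented interpretation of its calibration fields.

```python
from typing import Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def project_point(
    point_lidar: Vec3,
    R: Sequence[Sequence[float]],  # assumed 3x3 LiDAR -> camera rotation
    t: Vec3,                       # assumed LiDAR -> camera translation
    fx: float, fy: float, cx: float, cy: float,
) -> Optional[Tuple[float, float]]:
    """Project a LiDAR point to pixel (u, v) with an undistorted pinhole model.

    Hypothetical helper: the frame conventions are assumptions for illustration.
    Returns None when the point lies behind the camera.
    """
    # Extrinsic step: p_cam = R @ p_lidar + t
    x, y, z = (
        sum(R[i][j] * point_lidar[j] for j in range(3)) + t[i]
        for i in range(3)
    )
    if z <= 0:
        return None  # behind the image plane, so not visible in this RV
    # Intrinsic step: perspective divide, then scale and shift to pixels
    return fx * x / z + cx, fy * y / z + cy
```

For example, with an identity rotation, zero translation and fx = fy = 100, cx = cy = 50, the point (1, 2, 10) lands at pixel (60.0, 70.0). If the calibration is wrong, projected LiDAR points drift away from the objects visible in the RV image, which is the error the Calibration value is meant to correct.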

- To use the Sensor Fusion function, upload RV images and calibration values (or files) in addition to the existing 3D Cuboid task files.

Sensor Fusion folder structure

RV images, GT data for the RV images, and calibration data must each be placed in their own folder, named as follows.

- __RV__{title}: Reference images for the work targets

- __GT__{title}: GT data for the reference images

- __META__: Data mapped to the scene calibration information

- __META__{title}: Data mapped to the frame calibration information

- Allowed values for {title}:
  - front
  - rear
  - right
  - left
  - front_right
  - front_left
  - rear_right
  - rear_left
  - left_front
  - left_rear
  - right_front
  - right_rear

- The RV, GT, and META files matched to a bin file must have the same name as the bin file (excluding the extension).

- RV images are applied only when they are uploaded together with the 3D Cuboid bin files.

- Supported RV image extensions are jpg and png.
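The naming rules above can be summarized as a small helper that, given a scene folder, a bin file name and a view title, returns where the matching RV image, GT file and frame-level META file are expected. This is a hypothetical sketch: `companion_paths` is not part of any platform API, the `.json` extension for GT and META files follows the examples on this page, and the image extension is passed in because both jpg and png are accepted.

```python
from pathlib import PurePosixPath
from typing import Dict

# Values accepted for {title}, as listed on this page
ALLOWED_TITLES = {
    "front", "rear", "right", "left",
    "front_right", "front_left", "rear_right", "rear_left",
    "left_front", "left_rear", "right_front", "right_rear",
}


def companion_paths(scene: str, bin_name: str, title: str,
                    image_ext: str = "png") -> Dict[str, str]:
    """Return the expected RV/GT/META paths for one bin file.

    Hypothetical helper; .json for GT and META follows this page's examples.
    """
    if title not in ALLOWED_TITLES:
        raise ValueError(f"unsupported title: {title!r}")
    if image_ext not in ("jpg", "png"):
        raise ValueError(f"unsupported RV image extension: {image_ext!r}")
    stem = PurePosixPath(bin_name).stem  # "a.bin" -> "a": names must match the bin
    root = PurePosixPath(scene)
    return {
        "rv": str(root / f"__RV__{title}" / f"{stem}.{image_ext}"),
        "gt": str(root / f"__GT__{title}" / f"{stem}.json"),
        "meta": str(root / f"__META__{title}" / f"{stem}.json"),
    }
```

For example, `companion_paths("/scene", "a.bin", "front")` yields `/scene/__RV__front/a.png`, `/scene/__GT__front/a.json` and `/scene/__META__front/a.json`, the same paths used in the per-folder examples on this page.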

1. __RV__{title}

- Reference images for the task targets (frames)

- Example:

/scene/__RV__front/a.png

- All images in the same folder must use the same extension.
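A pre-upload check for one __RV__{title} folder could confirm that every image shares a single extension and that the extension is jpg or png. The helper `check_rv_folder` is hypothetical, and treating the extension check as case-sensitive is an assumption.

```python
from pathlib import PurePosixPath
from typing import Iterable


def check_rv_folder(filenames: Iterable[str]) -> str:
    """Return the single extension used in an __RV__{title} folder.

    Hypothetical check (case-sensitive by assumption): raises ValueError if the
    folder is empty, mixes extensions, or uses a format other than jpg/png.
    """
    exts = {PurePosixPath(name).suffix.lstrip(".") for name in filenames}
    if not exts:
        raise ValueError("folder contains no images")
    if len(exts) > 1:
        raise ValueError(f"mixed extensions in one folder: {sorted(exts)}")
    ext = exts.pop()
    if ext not in ("jpg", "png"):
        raise ValueError(f"unsupported RV image extension: {ext!r}")
    return ext
```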

2. __GT__{title}

- GT data for the reference images

- Example:

/scene/__GT__front/a.json

- Only the AIMMO GT format is supported.

- Required fields: filename, parent_path, annotations, attributes

- Any other fields are ignored. A file that does not follow this format cannot be referenced.

- Only the bbox, polygon, and poly segmentation annotation types can be referenced.

- The mapping target is not looked up by filename and parent_path. As long as the uploaded folder structure matches, mapping works correctly; these fields are included for cross-checking.


- Example JSON file:

- parent_path and filename indicate the reference image that the GT data will be mapped to.

{ "parent_path": "/scene/__RV__front", "filename": "a.png", "annotations": [], "attributes": {} }
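A validation pass that follows these rules might look like the sketch below: it rejects files missing a required field, drops any extra fields, and cross-checks parent_path and filename against the RV image the GT file is expected to describe. `load_gt` and its parameters are hypothetical, and annotation-type checks are left out because this page does not document which field carries the annotation type.

```python
import json
from typing import Any, Dict

REQUIRED_GT_FIELDS = ("filename", "parent_path", "annotations", "attributes")


def load_gt(text: str, expected_parent: str, expected_filename: str) -> Dict[str, Any]:
    """Parse a GT JSON file, keep only the required fields, and cross-check its target.

    Hypothetical helper mirroring the rules on this page, not a platform API.
    """
    data = json.loads(text)
    missing = [key for key in REQUIRED_GT_FIELDS if key not in data]
    if missing:
        raise ValueError(f"GT file is missing required fields: {missing}")
    # Extra fields are ignored, so drop them
    gt = {key: data[key] for key in REQUIRED_GT_FIELDS}
    # Cross-check: the file should describe the image whose folder it mirrors
    if (gt["parent_path"], gt["filename"]) != (expected_parent, expected_filename):
        raise ValueError(
            f"GT targets {gt['parent_path']}/{gt['filename']}, "
            f"expected {expected_parent}/{expected_filename}"
        )
    return gt
```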

3. __META__

- Data mapped as the scene calibration

- Name each file after its reference view (for example, front.json).

- Example:

/test/__META__/front.json

- Example JSON file:

{ "rv_type" : "front", "rotations": [0.032833,0.083,-0.009749], "translations": [0.65757,-0.2875,-0.546727], "intrinsic_camera_parameters": [2636.504955,2607.765140,1449.383497,945.305018,-0.355957,0.152205,-0.001168,0.002894], "camera_mode_type": "pinhole", "type": "type_m" }

Metadata file format

| Name | Type | Required | Notes |
|---|---|---|---|
| rotations | List of numbers | Yes | Array length must be 3 |
| translations | List of numbers | Yes | Array length must be 3 |
| intrinsic_camera_parameters | List of numbers | Yes | Array length must be 8 |
| camera_mode_type | String | No | One of pinhole or fisheye |
| type | String | No | One of Standard, type_m, or remote |
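The metadata table translates directly into a validation sketch: three required numeric arrays with fixed lengths and two optional string fields restricted to listed values. `validate_meta` is a hypothetical helper; the allowed strings are copied from the table, and whether the platform matches them case-sensitively is an assumption.

```python
import json
from typing import Any, Dict

# Required list fields and their fixed lengths, per the metadata table
REQUIRED_LISTS = {"rotations": 3, "translations": 3, "intrinsic_camera_parameters": 8}
# Optional string fields and their allowed values (spelling copied from the table)
OPTIONAL_ENUMS = {
    "camera_mode_type": {"pinhole", "fisheye"},
    "type": {"Standard", "type_m", "remote"},
}


def validate_meta(text: str) -> Dict[str, Any]:
    """Check a __META__ calibration file against the metadata table (hypothetical helper)."""
    meta = json.loads(text)  # json.JSONDecodeError is a subclass of ValueError
    for key, length in REQUIRED_LISTS.items():
        value = meta.get(key)
        if not isinstance(value, list) or len(value) != length:
            raise ValueError(f"{key} must be a list of {length} numbers")
        # bool is a subclass of int in Python, so exclude it explicitly
        if not all(isinstance(v, (int, float)) and not isinstance(v, bool) for v in value):
            raise ValueError(f"{key} must contain only numbers")
    for key, allowed in OPTIONAL_ENUMS.items():
        if key in meta and meta[key] not in allowed:
            raise ValueError(f"{key} must be one of {sorted(allowed)}")
    return meta
```

Because `json.JSONDecodeError` subclasses `ValueError`, a malformed file (for example, one missing a comma between fields) surfaces through the same exception type as a schema violation.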

4. __META__{title}

- Data mapped as the frame calibration information

- Name each file after the frame (bin file) it maps to.

- test.bin ↔ test.json

- Example:

/scene/__META__front/a.json

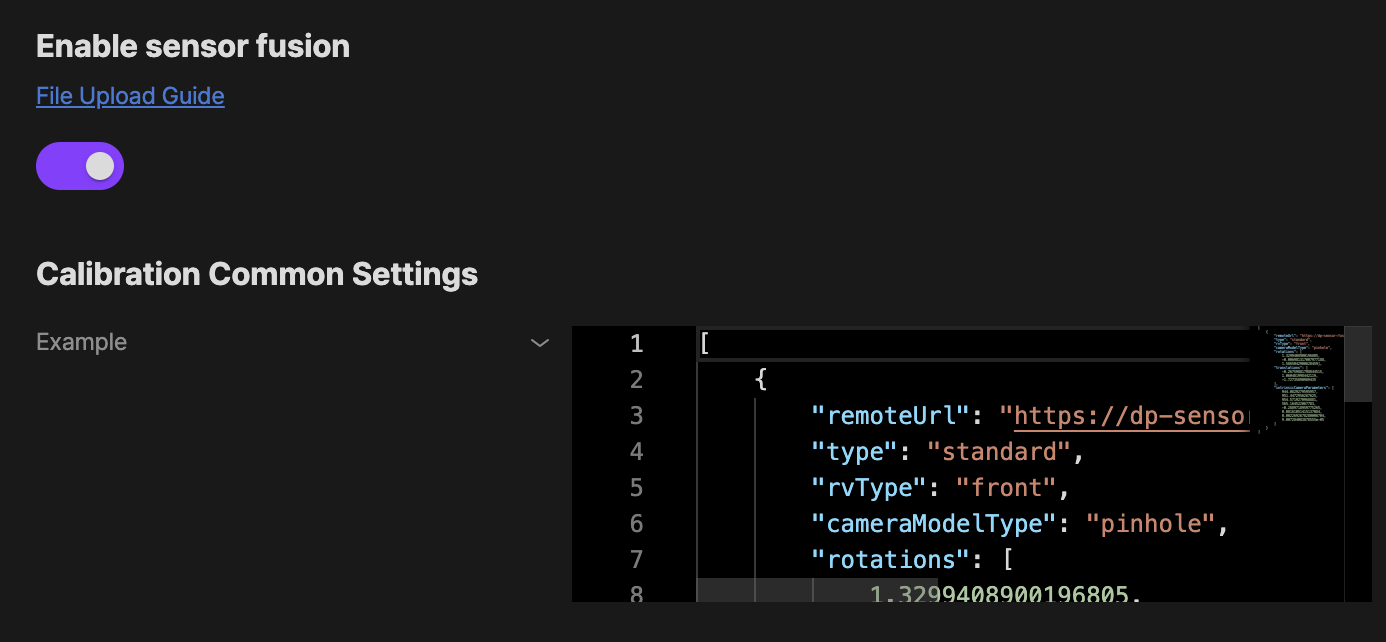

Activate Sensor Fusion


In the labeling settings menu, go to the Studio settings tab and select Activate Sensor Fusion to turn on the Sensor Fusion function.

Calibration common settings

Set calibration values that apply to all scene files in the project. If a scene-level or frame-level calibration value is uploaded, it takes priority over the common calibration value.
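The override rule can be sketched as a lookup that falls back from the most specific calibration to the common one. `resolve_calibration` is hypothetical, and the page only states that scene- and frame-level values beat the common value; preferring a frame value over a scene value is an assumption based on the frame value being more specific.

```python
from typing import Optional, TypeVar

T = TypeVar("T")


def resolve_calibration(common: Optional[T], scene: Optional[T] = None,
                        frame: Optional[T] = None) -> Optional[T]:
    """Pick the calibration to apply (hypothetical helper).

    Scene- and frame-level values override the common value. Checking the
    frame value before the scene value is an assumption.
    """
    for candidate in (frame, scene, common):
        if candidate is not None:
            return candidate
    return None
```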

When configuring the common calibration settings

Enter remoteUrl as follows, depending on the camera type.

- Lidar To Cam, Cuboid, Pinhole camera

remoteUrl: https://saas-dp-api.bluewhale.team/sensor-fusion/lidar-to-camera/cuboid/pinhole

- Lidar To Cam, Cuboid, Fisheye camera

remoteUrl: https://saas-dp-api.bluewhale.team/sensor-fusion/lidar-to-camera/cuboid/fisheye
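If you generate project settings from a script, the choice of remoteUrl reduces to a lookup by camera type. The sketch keys the two URLs listed for the Lidar To Cam, Cuboid case by the same pinhole and fisheye strings used for camera_mode_type in the metadata file format; reusing those strings as keys, and the helper `remote_url_for` itself, are assumptions for illustration.

```python
# remoteUrl values for the Lidar To Cam, Cuboid case, keyed by camera type (assumed keys)
REMOTE_URLS = {
    "pinhole": "https://saas-dp-api.bluewhale.team/sensor-fusion/lidar-to-camera/cuboid/pinhole",
    "fisheye": "https://saas-dp-api.bluewhale.team/sensor-fusion/lidar-to-camera/cuboid/fisheye",
}


def remote_url_for(camera_mode_type: str) -> str:
    """Return the remoteUrl to enter for a camera type (hypothetical helper)."""
    try:
        return REMOTE_URLS[camera_mode_type]
    except KeyError:
        raise ValueError(f"no remoteUrl listed for camera type {camera_mode_type!r}") from None
```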

If you have any other inquiries, please get in touch with us at [email protected]
